PUBLISHER: Global Market Insights Inc. | PRODUCT CODE: 1876622

Transformer-Optimized AI Chip Market Opportunity, Growth Drivers, Industry Trend Analysis, and Forecast 2025 - 2034

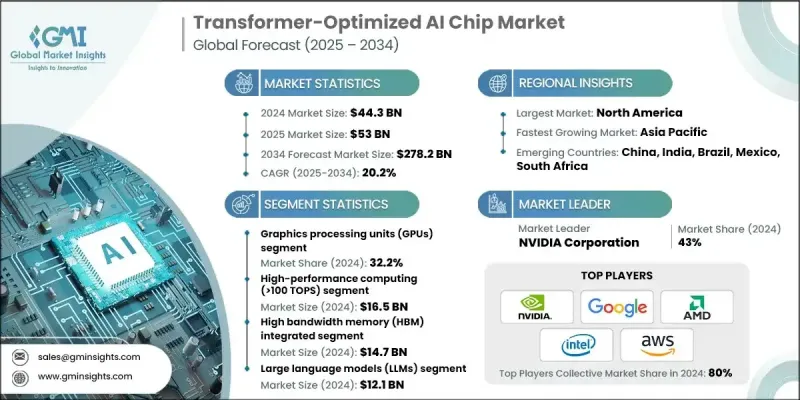

The Global Transformer-Optimized AI Chip Market was valued at USD 44.3 billion in 2024 and is estimated to grow at a CAGR of 20.2% to reach USD 278.2 billion by 2034.
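The headline figures can be sanity-checked with the standard compound-growth formula. The sketch below is illustrative only: it assumes ten annual compounding periods (2024 to 2034), which the summary does not state explicitly, and the function names are hypothetical rather than taken from the report's methodology.

```python
# Minimal compound-growth check of the headline figures.
# Assumption: 10 annual compounding periods between 2024 and 2034.

def project_value(start_value: float, cagr: float, years: int) -> float:
    """Value after compounding start_value at rate cagr for the given years."""
    return start_value * (1 + cagr) ** years


def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Constant annual growth rate that takes start_value to end_value."""
    return (end_value / start_value) ** (1 / years) - 1


start_usd_bn = 44.3    # 2024 valuation from the summary
end_usd_bn = 278.2     # 2034 forecast from the summary
years = 10             # assumed number of compounding periods

projected = project_value(start_usd_bn, 0.202, years)
rate = implied_cagr(start_usd_bn, end_usd_bn, years)

print(f"Projected 2034 value at 20.2% CAGR: USD {projected:.1f} billion")
print(f"CAGR implied by the start and end values: {rate:.1%}")
```

Under that assumption, the projection lands within about USD 1 billion of the published forecast, and the implied rate rounds to the stated 20.2%. The small gap is consistent with rounding in the published figures.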

The market is growing rapidly as industries increasingly demand specialized hardware designed to accelerate transformer-based architectures and large language model (LLM) operations. These chips are becoming essential in AI training and inference workloads where high throughput, low latency, and energy efficiency are critical. The shift toward domain-specific architectures featuring transformer-optimized compute units, high-bandwidth memory, and advanced interconnect technologies is fueling adoption across next-generation AI ecosystems. Sectors such as cloud computing, edge AI, and autonomous systems are integrating these chips to handle real-time analytics, generative AI, and multi-modal applications. The emergence of chiplet integration and domain-specific accelerators is transforming how AI systems scale, enabling higher performance and efficiency. At the same time, developments in memory hierarchies and packaging technologies are reducing latency while improving computational density, allowing transformer workloads to keep data closer to the processing units. These advancements are reshaping AI infrastructure globally, with transformer-optimized chips positioned at the center of high-performance, energy-efficient, and scalable AI processing.

| Market Scope | |
|---|---|
| Start Year | 2024 |
| Forecast Year | 2025-2034 |
| Start Value | $44.3 Billion |
| Forecast Value | $278.2 Billion |
| CAGR | 20.2% |

The graphics processing unit (GPU) segment held a 32.2% share in 2024. GPUs are widely adopted due to their mature ecosystem, strong parallel computing capability, and proven effectiveness in executing transformer-based workloads. Their ability to deliver massive throughput for training and inference of large language models makes them essential across industries such as finance, healthcare, and cloud-based services. With their flexibility, extensive developer support, and high computational density, GPUs remain the foundation of AI acceleration in data centers and enterprise environments.

The high-performance computing (HPC) segment, covering chips exceeding 100 TOPS, generated USD 16.5 billion in 2024, capturing a 37.2% share. These chips are indispensable for training large transformer models that require enormous parallelism and extremely high throughput. HPC-class processors are deployed across AI-driven enterprises, hyperscale data centers, and research facilities to handle demanding applications such as complex multi-modal AI, large-batch inference, and LLM training involving billions of parameters. Their contribution to accelerating computing workloads has positioned HPC chips as a cornerstone of AI innovation and infrastructure scalability.

The North America Transformer-Optimized AI Chip Market held a 40.2% share in 2024. The region's leadership stems from substantial investments by cloud service providers, AI research labs, and government-backed initiatives promoting domestic semiconductor production. Strong collaboration among chip designers, foundries, and AI solution providers continues to propel market growth. The presence of major technology leaders and continued funding in AI infrastructure development are strengthening North America's competitive advantage in high-performance computing and transformer-based technologies.
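The segment figures above can be cross-checked against the 2024 market total with simple share arithmetic. This is a rough sketch, assuming every percentage is a share of the same USD 44.3 billion global total. The summary does not spell out that base, and the derived dollar values for the GPU and North America segments are estimates, not figures published in the report.

```python
# Cross-check of segment shares against the 2024 market total.
# Assumption: every share is measured against the same global 2024 total.

TOTAL_2024_USD_BN = 44.3  # 2024 valuation from the summary


def implied_total(segment_value: float, share: float) -> float:
    """Market total implied by a segment's value and its share."""
    return segment_value / share


def segment_value(total: float, share: float) -> float:
    """Segment value implied by the market total and the segment's share."""
    return total * share


# The HPC (>100 TOPS) segment is the only one with both a value and a share.
hpc_implied_total = implied_total(16.5, 0.372)

# Derived estimates only; these dollar values are not stated in the summary.
gpu_estimate = segment_value(TOTAL_2024_USD_BN, 0.322)
north_america_estimate = segment_value(TOTAL_2024_USD_BN, 0.402)

print(f"Total implied by the HPC segment: USD {hpc_implied_total:.1f} billion")
print(f"Estimated GPU segment value: USD {gpu_estimate:.1f} billion")
print(f"Estimated North America value: USD {north_america_estimate:.1f} billion")
```

The total implied by the HPC figures agrees with the stated USD 44.3 billion to within rounding, which suggests the published value and share are internally consistent.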

Prominent companies operating in the Global Transformer-Optimized AI Chip Market include NVIDIA Corporation, Intel Corporation, Advanced Micro Devices (AMD), Samsung Electronics Co., Ltd., Google (Alphabet Inc.), Microsoft Corporation, Tesla, Inc., Qualcomm Technologies, Inc., Baidu, Inc., Huawei Technologies Co., Ltd., Alibaba Group, Amazon Web Services, Apple Inc., Cerebras Systems, Inc., Graphcore Ltd., SiMa.ai, Mythic AI, Groq, Inc., SambaNova Systems, Inc., and Tenstorrent Inc. Leading companies in the Transformer-Optimized AI Chip Market are focusing on innovation, strategic alliances, and manufacturing expansion to strengthen their global presence. Firms are heavily investing in research and development to create energy-efficient, high-throughput chips optimized for transformer and LLM workloads. Partnerships with hyperscalers, cloud providers, and AI startups are fostering integration across computing ecosystems. Many players are pursuing vertical integration by combining software frameworks with hardware solutions to offer complete AI acceleration platforms.

Table of Contents

Chapter 1 Methodology and Scope

- 1.1 Market scope and definition

- 1.2 Research design

- 1.2.1 Research approach

- 1.2.2 Data collection methods

- 1.3 Data mining sources

- 1.3.1 Global

- 1.3.2 Regional/Country

- 1.4 Base estimates and calculations

- 1.4.1 Base year calculation

- 1.4.2 Key trends for market estimation

- 1.5 Primary research and validation

- 1.5.1 Primary sources

- 1.6 Forecast model

- 1.7 Research assumptions and limitations

Chapter 2 Executive Summary

- 2.1 Industry 360° synopsis

- 2.2 Key market trends

- 2.2.1 Chip types trends

- 2.2.2 Performance class trends

- 2.2.3 Memory trends

- 2.2.4 Application trends

- 2.2.5 End use trends

- 2.2.6 Regional trends

- 2.3 CXO perspectives: Strategic imperatives

- 2.3.1 Key decision points for industry executives

- 2.3.2 Critical success factors for market players

- 2.4 Future outlook and strategic recommendations

Chapter 3 Industry Insights

- 3.1 Industry ecosystem analysis

- 3.2 Industry impact forces

- 3.2.1 Growth drivers

- 3.2.1.1 Rising demand for LLMs and transformer architectures

- 3.2.1.2 Rapid growth of AI training and inference workloads

- 3.2.1.3 Domain-specific accelerators for transformer compute

- 3.2.1.4 Edge and distributed transformer deployment

- 3.2.1.5 Advanced packaging and memory-hierarchy innovations

- 3.2.2 Industry pitfalls and challenges

- 3.2.2.1 High development costs and R&D complexity

- 3.2.2.2 Thermal management and power efficiency constraints

- 3.2.3 Market opportunities

- 3.2.3.1 Expansion into large language models (LLMs) and generative AI

- 3.2.3.2 Edge and distributed AI deployment

- 3.3 Growth potential analysis

- 3.4 Regulatory landscape

- 3.4.1 North America

- 3.4.1.1 U.S.

- 3.4.1.2 Canada

- 3.4.2 Europe

- 3.4.3 Asia Pacific

- 3.4.4 Latin America

- 3.4.5 Middle East and Africa

- 3.5 Technology landscape

- 3.5.1 Current trends

- 3.5.2 Emerging technologies

- 3.6 Pipeline analysis

- 3.7 Future market trends

- 3.8 Porter's analysis

- 3.9 PESTEL analysis

Chapter 4 Competitive Landscape, 2024

- 4.1 Introduction

- 4.2 Company market share analysis

- 4.2.1 Global

- 4.2.2 North America

- 4.2.3 Europe

- 4.2.4 Asia Pacific

- 4.2.5 Latin America

- 4.2.6 Middle East and Africa

- 4.3 Company matrix analysis

- 4.4 Competitive analysis of major market players

- 4.5 Competitive positioning matrix

- 4.6 Key developments

- 4.6.1 Merger and acquisition

- 4.6.2 Partnership and collaboration

- 4.6.3 New product launches

- 4.6.4 Expansion plans

Chapter 5 Market Estimates and Forecast, By Chip Type, 2021 - 2034 ($ Bn)

- 5.1 Key trends

- 5.2 Neural Processing Units (NPUs)

- 5.3 Graphics Processing Units (GPUs)

- 5.4 Tensor Processing Units (TPUs)

- 5.5 Application-Specific Integrated Circuits (ASICs)

- 5.6 Field-Programmable Gate Arrays (FPGAs)

Chapter 6 Market Estimates and Forecast, By Performance Class, 2021 - 2034 ($ Bn)

- 6.1 Key trends

- 6.2 High-Performance Computing (>100 TOPS)

- 6.3 Mid-Range Performance (10-100 TOPS)

- 6.4 Edge/Mobile Performance (1-10 TOPS)

- 6.5 Ultra-Low Power (<1 TOPS)

Chapter 7 Market Estimates and Forecast, By Memory, 2021 - 2034 ($ Bn)

- 7.1 Key trends

- 7.2 High Bandwidth Memory (HBM) Integrated

- 7.3 On-Chip SRAM Optimized

- 7.4 Processing-in-Memory (PIM)

- 7.5 Distributed Memory Systems

Chapter 8 Market Estimates and Forecast, By Application, 2021 - 2034 ($ Bn)

- 8.1 Key trends

- 8.2 Large Language Models (LLMs)

- 8.3 Computer Vision Transformers (ViTs)

- 8.4 Multimodal AI Systems

- 8.5 Generative AI Applications

- 8.6 Others

Chapter 9 Market Estimates and Forecast, By End Use, 2021 - 2034 ($ Bn)

- 9.1 Key trends

- 9.2 Technology & Cloud Services

- 9.3 Automotive & Transportation

- 9.4 Healthcare & Life Sciences

- 9.5 Financial Services

- 9.6 Telecommunications

- 9.7 Industrial & Manufacturing

- 9.8 Others

Chapter 10 Market Estimates and Forecast, By Region, 2021 - 2034 ($ Bn)

- 10.1 Key trends

- 10.2 North America

- 10.2.1 U.S.

- 10.2.2 Canada

- 10.3 Europe

- 10.3.1 Germany

- 10.3.2 UK

- 10.3.3 France

- 10.3.4 Italy

- 10.3.5 Spain

- 10.3.6 Netherlands

- 10.4 Asia Pacific

- 10.4.1 China

- 10.4.2 India

- 10.4.3 Japan

- 10.4.4 Australia

- 10.4.5 South Korea

- 10.5 Latin America

- 10.5.1 Brazil

- 10.5.2 Mexico

- 10.5.3 Argentina

- 10.6 Middle East and Africa

- 10.6.1 South Africa

- 10.6.2 Saudi Arabia

- 10.6.3 UAE

Chapter 11 Company Profiles

- 11.1 Advanced Micro Devices (AMD)

- 11.2 Alibaba Group

- 11.3 Amazon Web Services

- 11.4 Apple Inc.

- 11.5 Baidu, Inc.

- 11.6 Cerebras Systems, Inc.

- 11.7 Google (Alphabet Inc.)

- 11.8 Groq, Inc.

- 11.9 Graphcore Ltd.

- 11.10 Huawei Technologies Co., Ltd.

- 11.11 Intel Corporation

- 11.12 Microsoft Corporation

- 11.13 Mythic AI

- 11.14 NVIDIA Corporation

- 11.15 Qualcomm Technologies, Inc.

- 11.16 Samsung Electronics Co., Ltd.

- 11.17 SiMa.ai

- 11.18 SambaNova Systems, Inc.

- 11.19 Tenstorrent Inc.

- 11.20 Tesla, Inc.