PUBLISHER: Mordor Intelligence | PRODUCT CODE: 1851110

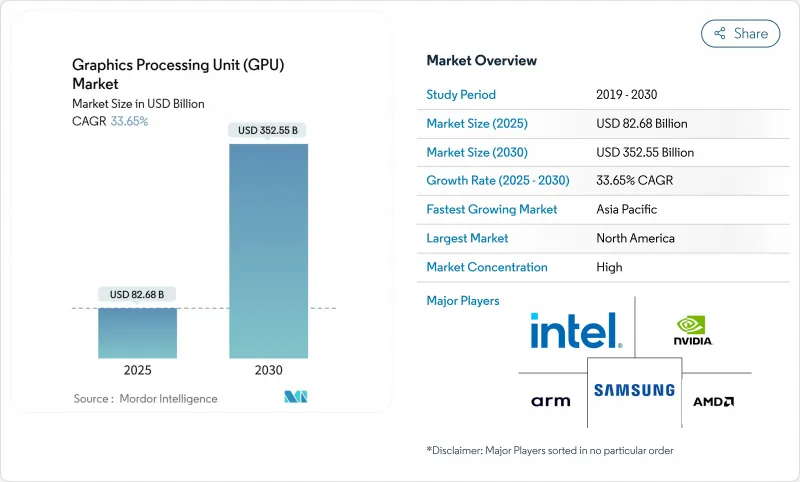

Graphics Processing Unit (GPU) - Market Share Analysis, Industry Trends & Statistics, Growth Forecasts (2025 - 2030)

The graphics processing unit market size stands at USD 82.68 billion in 2025 and is forecast to reach USD 352.55 billion by 2030, delivering a 33.65% CAGR.
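As a quick consistency check on the headline figures, the sketch below recomputes the compound annual growth rate from the 2025 and 2030 values quoted above. It assumes five compounding periods (2025 to 2030); the function names `cagr` and `project` are illustrative and do not come from the report.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.10 means 10%)."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding start_value at `rate` for `years` periods."""
    return start_value * (1 + rate) ** years


# Figures quoted in the report summary (USD billion).
size_2025 = 82.68
size_2030 = 352.55
periods = 5  # assumption: 2025 -> 2030 counted as five annual periods

rate = cagr(size_2025, size_2030, periods)
print(f"Implied CAGR: {rate:.2%}")
print(f"2030 value at that rate: {project(size_2025, rate, periods):.2f}")
```

Under that five-period assumption the implied rate lands close to the stated 33.65%, which suggests the three headline numbers are mutually consistent.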

The surge reflects an industry pivot from graphics-only workloads to AI-centric compute, where GPUs function as the workhorses behind generative AI training, hyperscale inference, cloud gaming, and heterogeneous edge systems. Accelerated sovereign AI initiatives, corporate investment in domain-specific models, and the rapid maturation of 8K, ray-traced gaming continue to deepen demand for high-bandwidth devices. Tight advanced-node capacity, coupled with export-control complexity, is funneling orders toward multi-foundry supply strategies. Meanwhile, chiplet-based designs and open instruction sets are introducing new competitive vectors without dislodging the field's current concentration.

Global Graphics Processing Unit (GPU) Market Trends and Insights

Generative-AI Model Training GPU Intensity

Large transformer models routinely exceed 100 billion parameters, forcing enterprises to operate tens of thousands of GPUs in parallel for months-long training runs and elevating tensor throughput above traditional graphics metrics. High-bandwidth memory, lossless interconnects, and liquid-cooled racks have become standard purchase criteria. Healthcare, finance, and manufacturing firms now mirror hyperscalers by provisioning dedicated super-clusters for domain models, a pattern that broadens the graphics processing unit market's end-user base. Mixture-of-experts architectures amplify the demand, as workflows orchestrate heterogeneous GPU pools to handle context-specific shards. Power-density constraints inside legacy data halls further accelerate migration to purpose-built AI pods.

"Sovereign-AI" Datacenter Build-outs

Governments view domestic AI compute as a strategic asset akin to an energy or telecom backbone. Canada allocated USD 2 billion for a national AI compute strategy focused on GPU-powered supercomputers. India's IndiaAI Mission plans 10,000+ GPUs for indigenous language models. South Korea is stockpiling similar volumes to secure research parity. Such projects convert public budgets into multi-year purchase schedules, stabilizing baseline demand across the graphics processing unit market. Region-specific model training, ranging from industrial automation in the EU to energy analytics in the Gulf, expands architectural requirements beyond datacenter SKUs into ruggedized edge accelerators.

Export-Control Limits on <= 7 nm GPU Sales

The United States introduced tiered licensing for advanced computing ICs, effectively curbing shipments of state-of-the-art GPUs to China. NVIDIA booked a USD 4.5 billion charge tied to restricted H20 accelerators, illustrating revenue sensitivity to licensing shifts. Chinese firms responded by fast-tracking domestic GPU projects, potentially diluting future demand for U.S. IP. The bifurcated supply chain forces vendors to maintain multiple silicon variants, lifting operating costs and complicating inventory planning throughout the graphics processing unit market.

Other drivers and restraints analyzed in the detailed report include:

- AR/VR and AI-Led Heterogeneous Computing Demand

- Cloud-Gaming Service Roll-outs

- Chronic Advanced-Node Supply Constraints

For the complete list of drivers and restraints, see the Table of Contents.

Segment Analysis

Discrete boards held 62.7% of the graphics processing unit market in 2024, the largest share of any GPU type that year. Demand concentrates on high-bandwidth memory, dedicated tensor cores, and scalable interconnects suited for AI clusters. Enterprises favor modularity, enabling phased rack upgrades without motherboard swaps. Gaming continues to validate high-end variants by adopting ray tracing and 8K assets that integrated GPUs cannot sustain.

Chiplet adoption is lowering the cost per performance tier and improving yields by stitching smaller dies. AMD's multi-chiplet layout and NVIDIA's NVLink Fusion both extend discrete relevance into semi-custom server designs. Meanwhile, integrated GPUs remain indispensable for mobile and entry desktops where thermal budgets dominate. The graphics processing unit industry thus segments along a mobility-versus-throughput spectrum rather than a pure cost axis.

Servers and data-center accelerators are projected to register the fastest 37.6% CAGR through 2030, underpinning the swelling graphics processing unit market. Hyperscale operators provision entire AI factories holding tens of thousands of boards interconnected via optical NVLink or PCIe 6.0 fabrics. Sustained procurement contracts from cloud providers, public research consortia, and pharmaceutical pipelines jointly anchor demand at multi-year horizons.

Gaming systems remain the single largest installed-base category, but their growth curve is modest next to cloud and enterprise AI. Automotive, industrial robotics, and medical imaging represent smaller yet high-margin verticals thanks to functional-safety and long-life support requirements. Collectively, these edge cohorts diversify the graphics processing unit industry's revenue away from cyclical consumer demand.

Graphics Processing Unit (GPU) Market is Segmented by GPU Type (Discrete GPU, Integrated GPU, and Others), Device Application (Mobile Devices and Tablets, PCs and Workstations, and More), Deployment Model (On-Premises and Cloud), Instruction-Set Architecture (x86-64, Arm, and More), and by Geography. The Market Forecasts are Provided in Terms of Value (USD).

Geography Analysis

North America captured 43.7% of the graphics processing unit market in 2024, anchored by Silicon Valley chip design, hyperscale cloud campuses, and deep venture funding pipelines. The region benefits from tight integration between semiconductor IP owners and AI software start-ups, accelerating time-to-volume for next-gen boards. Export-control regimes introduce compliance overhead yet simultaneously channel domestic subsidies into advanced-node fabrication and packaging lines.

Asia-Pacific is the fastest-growing territory, expected to post a 37.4% CAGR to 2030. China accelerates indigenous GPU programs under technology-sovereignty mandates, while India's IndiaAI Mission finances national GPU facilities and statewide language models. South Korea's 10,000-GPU state compute hub and Japan's AI disaster-response initiatives extend regional demand beyond commercial clouds into public-sector supercomputing.

Europe balances stringent AI governance with industrial modernization goals. Germany partners with NVIDIA to build an industrial AI cloud targeting automotive and machinery digital twins. France, Italy, and the UK prioritize multilingual LLMs and fintech risk analytics, prompting localized GPU clusters housed in high-efficiency, district-cooled data centers. The Middle East, led by Saudi Arabia and the UAE, is investing heavily in AI factories to diversify economies, further broadening the graphics processing unit market footprint across emerging geographies.

- NVIDIA Corporation

- Advanced Micro Devices Inc.

- Intel Corporation

- Apple Inc.

- Samsung Electronics Co. Ltd.

- Qualcomm Technologies Inc.

- Arm Ltd.

- Imagination Technologies Group

- EVGA Corp.

- Sapphire Technology Ltd.

- ASUSTeK Computer Inc.

- Micro-Star International (MSI)

- Gigabyte Technology Co. Ltd.

- Zotac Technology Ltd.

- Palit Microsystems Ltd.

- Leadtek Research Inc.

- Colorful Technology Co. Ltd.

- Amazon Web Services (Elastic GPUs)

- Google LLC (Cloud TPU/GPU)

- Huawei HiSilicon

- Graphcore Ltd.

Additional Benefits:

- The market estimate (ME) sheet in Excel format

- 3 months of analyst support

TABLE OF CONTENTS

1 INTRODUCTION

- 1.1 Study Assumptions and Market Definition

- 1.2 Scope of the Study

2 RESEARCH METHODOLOGY

3 EXECUTIVE SUMMARY

4 MARKET LANDSCAPE

- 4.1 Market Overview

- 4.2 Market Drivers

- 4.2.1 Evolving graphics realism in AAA gaming

- 4.2.2 AR/VR and AI-led heterogeneous computing demand

- 4.2.3 Cloud-gaming service roll-outs

- 4.2.4 Generative-AI model training GPU intensity

- 4.2.5 "Sovereign-AI" datacenter build-outs

- 4.2.6 Chiplet-based custom GPU SKUs

- 4.3 Market Restraints

- 4.3.1 High upfront capex and BOM costs

- 4.3.2 Chronic advanced-node supply constraints

- 4.3.3 Export-control limits on <= 7 nm GPU sales

- 4.3.4 Cooling/power-density limits in hyperscale DCs

- 4.4 Supply-Chain Analysis

- 4.5 Regulatory Landscape

- 4.6 Technological Outlook

- 4.7 Porter's Five Forces Analysis

- 4.7.1 Bargaining Power of Suppliers

- 4.7.2 Bargaining Power of Buyers

- 4.7.3 Threat of New Entrants

- 4.7.4 Threat of Substitutes

- 4.7.5 Intensity of Competitive Rivalry

- 4.8 Assessment of Macroeconomic Factors on the Market

5 MARKET SIZE AND GROWTH FORECASTS (VALUE)

- 5.1 By GPU Type

- 5.1.1 Discrete GPU

- 5.1.2 Integrated GPU

- 5.1.3 Others

- 5.2 By Device Application

- 5.2.1 Mobile Devices and Tablets

- 5.2.2 PCs and Workstations

- 5.2.3 Servers and Data-center Accelerators

- 5.2.4 Gaming Consoles and Handhelds

- 5.2.5 Automotive / ADAS

- 5.2.6 Other Embedded and Edge Devices

- 5.3 By Deployment Model

- 5.3.1 On-Premises

- 5.3.2 Cloud

- 5.4 By Instruction-Set Architecture

- 5.4.1 x86-64

- 5.4.2 Arm

- 5.4.3 RISC-V and OpenGPU

- 5.4.4 Others (Power, MIPS)

- 5.5 By Geography

- 5.5.1 North America

- 5.5.1.1 United States

- 5.5.1.2 Canada

- 5.5.1.3 Mexico

- 5.5.2 South America

- 5.5.2.1 Brazil

- 5.5.2.2 Argentina

- 5.5.2.3 Rest of South America

- 5.5.3 Europe

- 5.5.3.1 Germany

- 5.5.3.2 United Kingdom

- 5.5.3.3 France

- 5.5.3.4 Italy

- 5.5.3.5 Rest of Europe

- 5.5.4 Asia-Pacific

- 5.5.4.1 China

- 5.5.4.2 Japan

- 5.5.4.3 India

- 5.5.4.4 South Korea

- 5.5.4.5 Southeast Asia

- 5.5.4.6 Rest of Asia-Pacific

- 5.5.5 Middle East and Africa

- 5.5.5.1 Middle East

- 5.5.5.1.1 Saudi Arabia

- 5.5.5.1.2 United Arab Emirates

- 5.5.5.1.3 Turkey

- 5.5.5.1.4 Rest of Middle East

- 5.5.5.2 Africa

- 5.5.5.2.1 South Africa

- 5.5.5.2.2 Egypt

- 5.5.5.2.3 Nigeria

- 5.5.5.2.4 Rest of Africa

6 COMPETITIVE LANDSCAPE

- 6.1 Market Concentration

- 6.2 Strategic Moves

- 6.3 Market Share Analysis

- 6.4 Company Profiles (includes Global level Overview, Market level overview, Core Segments, Financials as available, Strategic Information, Market Rank/Share for key companies, Products and Services, and Recent Developments)

- 6.4.1 NVIDIA Corporation

- 6.4.2 Advanced Micro Devices Inc.

- 6.4.3 Intel Corporation

- 6.4.4 Apple Inc.

- 6.4.5 Samsung Electronics Co. Ltd.

- 6.4.6 Qualcomm Technologies Inc.

- 6.4.7 Arm Ltd.

- 6.4.8 Imagination Technologies Group

- 6.4.9 EVGA Corp.

- 6.4.10 Sapphire Technology Ltd.

- 6.4.11 ASUSTeK Computer Inc.

- 6.4.12 Micro-Star International (MSI)

- 6.4.13 Gigabyte Technology Co. Ltd.

- 6.4.14 Zotac Technology Ltd.

- 6.4.15 Palit Microsystems Ltd.

- 6.4.16 Leadtek Research Inc.

- 6.4.17 Colorful Technology Co. Ltd.

- 6.4.18 Amazon Web Services (Elastic GPUs)

- 6.4.19 Google LLC (Cloud TPU/GPU)

- 6.4.20 Huawei HiSilicon

- 6.4.21 Graphcore Ltd.

7 MARKET OPPORTUNITIES AND FUTURE OUTLOOK

- 7.1 White-space and Unmet-need Assessment