PUBLISHER: Mordor Intelligence | PRODUCT CODE: 1910814

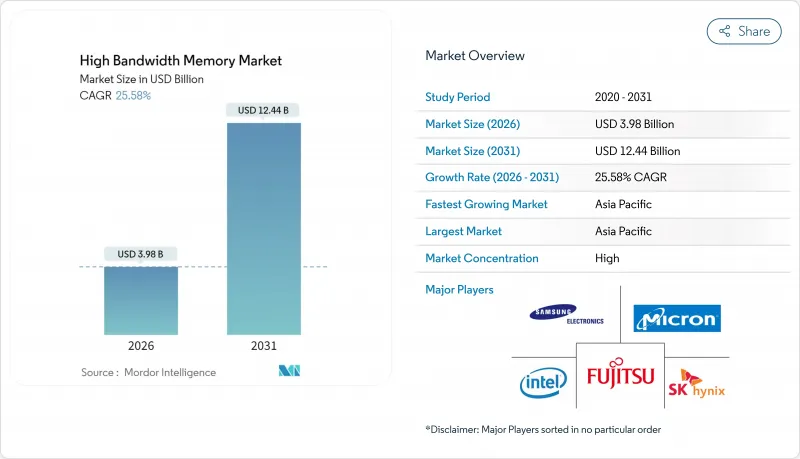

High Bandwidth Memory - Market Share Analysis, Industry Trends & Statistics, Growth Forecasts (2026 - 2031)

The high bandwidth memory market is expected to grow from USD 3.17 billion in 2025 to USD 3.98 billion in 2026 and is forecast to reach USD 12.44 billion by 2031 at 25.58% CAGR over 2026-2031.
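The headline figures above can be cross-checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The sketch below uses only the market sizes and rate quoted in the report. The helper names `cagr` and `project` are illustrative and are not taken from the report.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate that takes `start` to `end` in `years` years."""
    return (end / start) ** (1 / years) - 1


def project(start: float, rate: float, years: int) -> float:
    """Value reached by compounding `start` at `rate` for `years` years."""
    return start * (1 + rate) ** years


# Market sizes quoted in the report, USD billion
size_2025, size_2026, size_2031 = 3.17, 3.98, 12.44
years = 2031 - 2026

implied = cagr(size_2026, size_2031, years)
projected_2031 = project(size_2026, 0.2558, years)

print(f"Implied 2026-2031 CAGR: {implied:.2%}")
print(f"2031 size at the stated 25.58% CAGR: USD {projected_2031:.2f} billion")
print(f"2025-2026 growth: {size_2026 / size_2025 - 1:.2%}")
```

The implied rate comes out at about 25.6%, and compounding USD 3.98 billion at 25.58% for five years lands at roughly USD 12.43 billion. The three headline figures therefore agree to within rounding.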

Sustained demand for AI-optimized servers, wider DDR5 adoption, and aggressive hyperscaler spending continued to accelerate capacity expansions across the semiconductor value chain in 2025. Over the past year, suppliers concentrated on TSV yield improvement, while packaging partners invested in new CoWoS lines to ease substrate shortages. Automakers deepened engagements with memory vendors to secure ISO 26262-qualified HBM for Level 3 and Level 4 autonomous platforms. Asia-Pacific's fabrication ecosystem retained production leadership after Korean manufacturers committed multibillion-dollar outlays aimed at next-generation HBM4E ramps.

Global High Bandwidth Memory Market Trends and Insights

AI-Server Proliferation and GPU Attach Rates

Rapid growth in large language models drove a seven-fold rise in HBM-per-GPU requirements during 2024 compared with traditional HPC devices. NVIDIA's H100 paired 80 GB of HBM3 with 3.35 TB/s of bandwidth, while its successor, the H200, raised this to 141 GB of HBM3E at 4.8 TB/s. Order backlogs committed most supplier capacity through 2026, forcing data-center operators to pre-purchase inventory and co-invest in packaging lines.
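The H100 and H200 memory figures quoted above can be compared directly. The sketch below computes the generational ratios and, as an illustrative metric introduced here and not used in the report, the time needed to read the full memory once at peak bandwidth. Peak rates ignore real-world efficiency, so treat the results as rough.

```python
# Memory figures quoted in the report for NVIDIA's H100 and H200
gpus = {
    "H100": {"capacity_gb": 80, "bandwidth_tbs": 3.35},
    "H200": {"capacity_gb": 141, "bandwidth_tbs": 4.8},
}


def full_sweep_ms(capacity_gb: float, bandwidth_tbs: float) -> float:
    """Hypothetical metric: time to read all memory once at peak bandwidth, in ms."""
    # 1 TB/s = 1000 GB/s; multiply seconds by 1000 to get milliseconds
    return capacity_gb / (bandwidth_tbs * 1000) * 1000


old, new = gpus["H100"], gpus["H200"]
cap_ratio = new["capacity_gb"] / old["capacity_gb"]
bw_ratio = new["bandwidth_tbs"] / old["bandwidth_tbs"]

print(f"Capacity grew {cap_ratio:.2f}x, bandwidth grew {bw_ratio:.2f}x")
for name, spec in gpus.items():
    sweep = full_sweep_ms(spec["capacity_gb"], spec["bandwidth_tbs"])
    print(f"{name}: {sweep:.1f} ms per full-memory read")
```

Capacity grew faster than bandwidth (about 1.76x versus 1.43x), so a full pass over memory takes longer on the H200 (roughly 29 ms versus 24 ms at peak rate).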

Data-Center Shift to DDR5 and 2.5-D Packaging

Hyperscalers moved workloads from DDR4 to DDR5 to obtain 50% better performance per watt, simultaneously adopting 2.5-D integration that links AI accelerators to stacked memory on silicon interposers. Dependence on a single packaging platform heightened supply-chain risk when substrate shortages delayed GPU launches throughout 2024.

TSV Yield Losses Above 12-Layer Stacks

Yield fell below 70% on 16-high HBM stacks because thermal cycling induced copper-migration failures within TSVs. Manufacturers pursued thermal through-silicon via designs and novel dielectric materials to stabilize reliability, but commercialization remains two years away.
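One way to see why yield degrades as stacks grow taller is a simple compounding model: if each die-and-bond layer survives independently with probability p, an n-high stack survives with probability p^n. This independence assumption is a deliberate simplification introduced here, not the report's methodology, and the report's "below 70%" figure is treated as exactly 70% for illustration.

```python
def stack_yield(per_layer_yield: float, layers: int) -> float:
    """Toy model: a stack is good only if every layer survives independently."""
    return per_layer_yield ** layers


def implied_per_layer_yield(stack_yield_value: float, layers: int) -> float:
    """Invert the toy model to recover the per-layer yield."""
    return stack_yield_value ** (1 / layers)


# Assumption: treat the "below 70%" 16-high figure as exactly 70%
p = implied_per_layer_yield(0.70, 16)
print(f"Implied per-layer yield: {p:.2%}")
for layers in (8, 12, 16):
    print(f"{layers:>2}-high stack yield: {stack_yield(p, layers):.1%}")
```

Under this toy model, a per-layer yield near 97.8% gives roughly 84% at 8-high and 77% at 12-high before falling to 70% at 16-high. The point is the shape of the curve: small per-layer losses compound quickly with stack height.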

Other drivers and restraints analyzed in the detailed report include:

- Edge-AI Inference in Automotive ADAS

- Hyperscaler Preference for Silicon Interposer Stacks

- Limited CoWoS/SoIC Advanced-Packaging Capacity

For the complete list of drivers and restraints, see the Table of Contents.

Segment Analysis

The server category led the high bandwidth memory market with a 67.80% revenue share in 2025, reflecting hyperscale operators' pivot to AI servers that each integrate eight to twelve HBM stacks. Demand accelerated after cloud providers launched foundation-model services that rely on per-GPU bandwidth above 3 TB/s. Energy efficiency targets in 2025 favored stacked DRAM because it delivered superior performance-per-watt over discrete solutions, enabling data-center operators to stay within power envelopes. An enterprise refresh cycle began as companies replaced DDR4-based nodes with HBM-enabled accelerators, extending purchasing commitments into 2027.
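A revenue share can be turned into an approximate dollar figure by applying it to the 2025 market size quoted at the top of the report (USD 3.17 billion). The sketch below does this for the server share. The helper `segment_revenue` is an illustrative name, and the result is a derived estimate, not a figure stated in the report.

```python
MARKET_2025_USD_BN = 3.17  # 2025 market size quoted in the report


def segment_revenue(share_pct: float, market_size: float = MARKET_2025_USD_BN) -> float:
    """Approximate segment revenue (USD billion) from a percentage share."""
    return market_size * share_pct / 100


servers = segment_revenue(67.80)
print(f"Servers, 2025: ~USD {servers:.2f} billion")
print(f"All other applications: ~USD {MARKET_2025_USD_BN - servers:.2f} billion")
```

This puts server-related HBM revenue at roughly USD 2.15 billion in 2025, leaving about USD 1.02 billion across all other applications.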

The automotive and transportation segment, while smaller today, is the fastest-growing, with a projected 34.18% CAGR through 2031. Chipmakers collaborated with Tier 1 suppliers to embed functional-safety features that meet ASIL D requirements. Level 3 production programs in Europe and North America entered limited rollout in late 2024, with each vehicle drawing on memory bandwidth previously reserved for data-center inference clusters. As over-the-air update strategies matured, vehicle manufacturers began treating cars as edge servers, further sustaining HBM attach rates.
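To put the automotive CAGR in perspective, the sketch below converts it and the overall market CAGR into growth multiples. It assumes the segment rate applies over the same 2026-2031 window as the headline forecast; the report says only "through 2031", so the five-year window is an assumption.

```python
def growth_multiple(cagr: float, years: int) -> float:
    """How many times a value grows when compounded at `cagr` for `years` years."""
    return (1 + cagr) ** years


YEARS = 2031 - 2026  # assumed forecast window, matching the headline CAGR

auto = growth_multiple(0.3418, YEARS)
overall = growth_multiple(0.2558, YEARS)
print(f"Automotive and transportation: {auto:.2f}x, overall market: {overall:.2f}x")
```

Under that assumption the automotive segment grows about 4.35x over the period versus about 3.12x for the market as a whole, so its share of total HBM revenue would rise even though it starts from a small base.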

HBM3 accounted for 45.70% of revenue in 2025 after widespread adoption in AI training GPUs. Sampling of HBM3E started in Q1 2024, and first-wave production ran at pin speeds above 9.2 Gb/s. Per-stack bandwidth reached about 1.2 TB/s, reducing the number of stacks needed to hit a target bandwidth and lowering package thermal density.
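The link between pin speed, per-stack bandwidth, and stack count follows from a simple formula: peak bandwidth = interface width (bits) x pin rate (Gb/s) / 8. The sketch below assumes the 1024-bit per-stack interface used by HBM generations through HBM3E. The 4.8 TB/s target is borrowed from the H200 figure purely for illustration, and all rates are theoretical peaks.

```python
import math

HBM3_INTERFACE_BITS = 1024  # data width per stack for HBM2 through HBM3E


def stack_bandwidth_gbs(pin_rate_gbps: float, width_bits: int = HBM3_INTERFACE_BITS) -> float:
    """Peak per-stack bandwidth in GB/s: width x Gb/s per pin / 8 bits per byte."""
    return width_bits * pin_rate_gbps / 8


def stacks_needed(target_tbs: float, pin_rate_gbps: float) -> int:
    """Smallest stack count whose combined peak bandwidth meets the target."""
    return math.ceil(target_tbs * 1000 / stack_bandwidth_gbs(pin_rate_gbps))


for label, rate in [("HBM3 @ 6.4 Gb/s", 6.4), ("HBM3E @ 9.2 Gb/s", 9.2), ("HBM3E @ 9.6 Gb/s", 9.6)]:
    bw = stack_bandwidth_gbs(rate)
    print(f"{label}: {bw:.1f} GB/s per stack, {stacks_needed(4.8, rate)} stacks for 4.8 TB/s")
```

At 9.2 Gb/s a stack delivers about 1.18 TB/s, and at 9.6 Gb/s about 1.23 TB/s, consistent with the roughly 1.2 TB/s figure above. Meeting a 4.8 TB/s target drops from six stacks at 6.4 Gb/s to four at 9.6 Gb/s.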

HBM3E's 40.90% forecast CAGR is underpinned by Micron's 36 GB, 12-high product, which entered volume production in mid-2025 and targets accelerators running models of up to 520 billion parameters. Looking forward, the HBM4 standard published in April 2025 doubles the channels per stack and raises per-stack throughput to 2 TB/s, setting the stage for multi-petaflop AI processors.
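Both figures in the paragraph above follow from simple arithmetic. The sketch below assumes 24 Gbit DRAM dies for the 12-high stack (a common HBM3E die density, assumed here rather than stated in the report), and a 2048-bit HBM4 interface (double HBM3E's 1024 bits) running at 8 Gb/s per pin. Both helpers are illustrative.

```python
def stack_capacity_gb(dies: int, die_density_gbit: int) -> float:
    """Stack capacity in GB: dies x per-die density (Gbit) / 8 bits per byte."""
    return dies * die_density_gbit / 8


def stack_bandwidth_tbs(width_bits: int, pin_rate_gbps: float) -> float:
    """Peak per-stack bandwidth in TB/s (decimal units)."""
    return width_bits * pin_rate_gbps / 8 / 1000


# Assumption: 12-high stack built from 24 Gbit dies
print(f"12-high capacity: {stack_capacity_gb(12, 24):.0f} GB")

# Assumption: both interfaces run at 8 Gb/s per pin, so only width differs
print(f"1024-bit stack: {stack_bandwidth_tbs(1024, 8.0):.3f} TB/s")
print(f"2048-bit stack: {stack_bandwidth_tbs(2048, 8.0):.3f} TB/s")
```

With these assumptions, 12 dies of 24 Gbit each give 36 GB, and doubling the interface width at the same pin rate doubles peak bandwidth from about 1.02 TB/s to about 2.05 TB/s per stack, in line with the 2 TB/s HBM4 figure.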

The High Bandwidth Memory (HBM) Market is segmented by Application (Servers, Networking, High-Performance Computing, Consumer Electronics, and More), Technology (HBM2, HBM2E, HBM3, HBM3E, and HBM4), Memory Capacity per Stack (4 GB, 8 GB, 16 GB, 24 GB, and 32 GB and Above), Processor Interface (GPU, CPU, AI Accelerator/ASIC, FPGA, and More), and Geography (North America, South America, Europe, Asia-Pacific, and Middle East and Africa).

Geography Analysis

Asia-Pacific accounted for 41.00% of 2025 revenue, anchored by South Korea, where SK hynix and Samsung controlled more than 80% of production lines. Government incentives announced in 2024 supported an expanded fabrication cluster scheduled to open in 2027. Taiwan's TSMC maintained a monopoly on leading-edge CoWoS packaging, tying memory availability to local substrate supply and creating a regional concentration risk.

North America's share grew as Micron secured USD 6.1 billion in CHIPS Act funding to build advanced DRAM fabs in New York and Idaho, with pilot HBM runs expected in early 2026. Hyperscaler capital expenditures continued to drive local demand, although most wafers were still processed in Asia before final module assembly in the United States.

Europe entered the market through automotive demand: German OEMs qualified HBM for Level 3 driver-assist systems shipping in late 2024. The EU's semiconductor strategy remained R&D-centric, favoring photonic-interconnect and neuromorphic research that could unlock future expansion of the high bandwidth memory market.

The Middle East and Africa remained in an early adoption phase, although sovereign AI data-center projects initiated in 2025 point to rising regional demand.

Key companies profiled in the report:

- Samsung Electronics Co., Ltd.

- SK hynix Inc.

- Micron Technology, Inc.

- Intel Corporation

- Advanced Micro Devices, Inc.

- Nvidia Corporation

- Taiwan Semiconductor Manufacturing Company Limited

- ASE Technology Holding Co., Ltd.

- Amkor Technology, Inc.

- Powertech Technology Inc.

- United Microelectronics Corporation

- GlobalFoundries Inc.

- Applied Materials Inc.

- Marvell Technology, Inc.

- Rambus Inc.

- Cadence Design Systems, Inc.

- Synopsys, Inc.

- Siliconware Precision Industries Co., Ltd.

- JCET Group Co., Ltd.

- Chipbond Technology Corporation

- Broadcom Inc.

- Celestial AI

- ASE-SPIL (Silicon Products)

- Graphcore Limited

Additional Benefits:

- The market estimate (ME) sheet in Excel format

- 3 months of analyst support

TABLE OF CONTENTS

1 INTRODUCTION

- 1.1 Study Assumptions and Market Definition

- 1.2 Scope of the Study

2 RESEARCH METHODOLOGY

3 EXECUTIVE SUMMARY

4 MARKET LANDSCAPE

- 4.1 Market Overview

- 4.2 Market Drivers

- 4.2.1 AI-server proliferation and GPU attach rates

- 4.2.2 Data-center shift to DDR5 and 2.5-D packaging

- 4.2.3 Edge-AI inference in automotive ADAS

- 4.2.4 Hyperscaler preference for silicon interposer stacks

- 4.2.5 Localized memory production subsidies (KR, US, JP)

- 4.2.6 Photonics-ready HBM road-maps (HBM-P)

- 4.3 Market Restraints

- 4.3.1 TSV yield losses above 12-layer stacks

- 4.3.2 Limited CoWoS/SoIC advanced-packaging capacity

- 4.3.3 Thermal throttling in >1 TB/s bandwidth devices

- 4.3.4 Geo-political export controls on AI accelerators

- 4.4 Value Chain Analysis

- 4.5 Regulatory Landscape

- 4.6 Technological Outlook

- 4.7 Porter's Five Forces Analysis

- 4.7.1 Bargaining Power of Suppliers

- 4.7.2 Bargaining Power of Buyers

- 4.7.3 Threat of New Entrants

- 4.7.4 Threat of Substitutes

- 4.7.5 Intensity of Competitive Rivalry

- 4.8 DRAM Market Analysis

- 4.8.1 DRAM Revenue and Demand Forecast

- 4.8.2 DRAM Revenue by Geography

- 4.8.3 Current Pricing of DDR5 Products

- 4.8.4 List of DDR5 Product Manufacturers

- 4.9 Impact of Macroeconomic Factors

5 MARKET SIZE AND GROWTH FORECASTS (VALUE)

- 5.1 By Application

- 5.1.1 Servers

- 5.1.2 Networking

- 5.1.3 High-Performance Computing

- 5.1.4 Consumer Electronics

- 5.1.5 Automotive and Transportation

- 5.2 By Technology

- 5.2.1 HBM2

- 5.2.2 HBM2E

- 5.2.3 HBM3

- 5.2.4 HBM3E

- 5.2.5 HBM4

- 5.3 By Memory Capacity per Stack

- 5.3.1 4 GB

- 5.3.2 8 GB

- 5.3.3 16 GB

- 5.3.4 24 GB

- 5.3.5 32 GB and Above

- 5.4 By Processor Interface

- 5.4.1 GPU

- 5.4.2 CPU

- 5.4.3 AI Accelerator / ASIC

- 5.4.4 FPGA

- 5.4.5 Others

- 5.5 By Geography

- 5.5.1 North America

- 5.5.1.1 United States

- 5.5.1.2 Canada

- 5.5.1.3 Mexico

- 5.5.2 South America

- 5.5.2.1 Brazil

- 5.5.2.2 Rest of South America

- 5.5.3 Europe

- 5.5.3.1 Germany

- 5.5.3.2 France

- 5.5.3.3 United Kingdom

- 5.5.3.4 Rest of Europe

- 5.5.4 Asia-Pacific

- 5.5.4.1 China

- 5.5.4.2 Japan

- 5.5.4.3 India

- 5.5.4.4 South Korea

- 5.5.4.5 Rest of Asia-Pacific

- 5.5.5 Middle East and Africa

- 5.5.5.1 Middle East

- 5.5.5.1.1 Saudi Arabia

- 5.5.5.1.2 United Arab Emirates

- 5.5.5.1.3 Turkey

- 5.5.5.1.4 Rest of Middle East

- 5.5.5.2 Africa

- 5.5.5.2.1 South Africa

- 5.5.5.2.2 Rest of Africa

6 COMPETITIVE LANDSCAPE

- 6.1 Market Concentration

- 6.2 Strategic Moves

- 6.3 Market Share Analysis

- 6.4 Company Profiles (includes Global-level Overview, Market-level Overview, Core Segments, Financials, Strategic Information, Market Rank/Share, Products and Services, Recent Developments)

- 6.4.1 Samsung Electronics Co., Ltd.

- 6.4.2 SK hynix Inc.

- 6.4.3 Micron Technology, Inc.

- 6.4.4 Intel Corporation

- 6.4.5 Advanced Micro Devices, Inc.

- 6.4.6 Nvidia Corporation

- 6.4.7 Taiwan Semiconductor Manufacturing Company Limited

- 6.4.8 ASE Technology Holding Co., Ltd.

- 6.4.9 Amkor Technology, Inc.

- 6.4.10 Powertech Technology Inc.

- 6.4.11 United Microelectronics Corporation

- 6.4.12 GlobalFoundries Inc.

- 6.4.13 Applied Materials Inc.

- 6.4.14 Marvell Technology, Inc.

- 6.4.15 Rambus Inc.

- 6.4.16 Cadence Design Systems, Inc.

- 6.4.17 Synopsys, Inc.

- 6.4.18 Siliconware Precision Industries Co., Ltd.

- 6.4.19 JCET Group Co., Ltd.

- 6.4.20 Chipbond Technology Corporation

- 6.4.21 Cadence Design Systems Inc.

- 6.4.22 Broadcom Inc.

- 6.4.23 Celestial AI

- 6.4.24 ASE-SPIL (Silicon Products)

- 6.4.25 Graphcore Limited

7 MARKET OPPORTUNITIES AND FUTURE OUTLOOK

- 7.1 White-space and Unmet-need Assessment